[SIGIR-23] Curse of Low Dimensionality in Recommender Systems

1. Problem Definition

Lets consider how to measure the quality of recommender systems. The first thing that comes to mind is that higher recommendation accuracy generally indicates better quality. However, diversity, fairness, and robustness also play crucial roles in determining the overall quality of recommender systems.

User-item embeddings are an essential component of recommender systems, and the method of embedding these interactions is important. Additionally, due to the low dimensionality of user and item embeddings, particularly in dot-product models like matrix factorization, certain issues arise in recommender systems because low dimensionality embeddings limit the expressive power of these models.

2. Motivation

The motivation of this paper arises from the recognition that recommender systems often face challenges beyond accuracy, such as issues related to diversity, fairness, and robustness. The authors argue that many of these problems are partially caused by the low-dimensionality of user and item embeddings, particularly in models like matrix factorization that use dot-product-based ranking. The motivation of this paper is to empirically and theoretically demonstrate the necessity of sufficient dimensionality for user - item embeddings to achieve high-quality recommender systems. Please see the Reserach Gap section for motivation behind of this paper written undeer Preliminary Research. Also, this papers motivation is explained by deriving insights using these terms:

Dot-Product Model:

In recommender systems, the dot product is used to compute the preference score between a user and an item by taking the inner product of their respective embedding vectors. These embeddings represent users and items in a shared, lower-dimensional space, enabling the system to model complex relationships in a computationally efficient way.

For a user u and an item v, the preference score is:

$ \hat{r}_{u,v} = \langle \phi(u), \psi(v) \rangle $

where $\phi(u) $ and $\psi(v) $ are the embedding vectors of the user and item, respectively. The goal is to approximate a large, sparse interaction matrix by learning these user and item vectors.

Dimensionality:

Dimensionality in dot-product models refers to the number of latent factors used to represent user and item embeddings. The dimensionality (d) of these embeddings controls how well the model can capture relationships between users and items. More specifically, Higher dimensionality allows the model to capture more detailed and complex interactions, but at the cost of higher computational complexity. If the dimensionality is too low, the models lose their ability to capture complex patterns in user preferences, leading to “bounded” expressiveness. For instance, when the dimensionality is reduced to one, all users and items are essentially reduced to scalars, and the predicted ranking becomes oversimplified (reflecting only popularity or its reverse).

Since there were some research gap considering the preliminary research explained below, this situation creates a motivation.

Preliminary Research:

These embeddings can be learned using various models, such as:

- Matrix Factorization (MF): A basic dot-product model that directly computes user-item preferences.

- Neural Networks or Variational Autoencoders (VAEs): These can also be interpreted as dot-product models, where the preference is calculated via learned embeddings. VAEs use fully-connected layers and activation functions (e.g., softmax) to generate item scores. The final recommendation ranking is still determined by the order of the dot products between the user and item embeddings.

Research Gap:

The research gap identified in the paper is that most prior studies on recommender systems focus primarily on improving ranking accuracy while overlooking the impact of dimensionality in dot-product models.

-

Dimensionality is Underexplored: While other hyperparameters like learning rates and regularization weights are frequently tuned, the dimensionality of user and item embeddings is often ignored or not studied in depth. Researchers haven’t fully explored how dimensionality affects other important aspects of recommender systems such as diversity, fairness, and robustness.

-

Limited Investigation of High-Dimensional Models: Although high-dimensional models have shown improvements in ranking accuracy, there has been little examination of their potential to reduce biases like popularity bias or improve personalization and diversity in recommendations.

-

Misconceptions About Overfitting: It is often assumed that increasing dimensionality might lead to overfitting due to sparse data. However, some empirical results suggest that higher-dimensional models can still perform well, motivating the authors to investigate this further.

3. Method

This section presents a theoretical analysis focused on understanding the impact of dimensionality, particularly in the dot-product context, for recommender systems. The analysis considers the rank of the interaction matrix and the number of representable rankings constrained by dimensionality.

Theoretical Investigation

Theoretical investigation explores representable rankings over item vectors in $\mathbb{R}^d$, highlighting the limitations and expressive power of low-dimensional spaces in representing these rankings.

Main Concepts

Representable Rankings

A ranking is representable if there exists a query vector ( $q \in \mathbb{R}^d$ ) such that the items are ordered based on the inner products ( $\langle q, v_i \rangle$ ), where ( $v_i$ ) are the item vectors.

Bounding the Number of Representable Rankings

The focus is on estimating how many distinct rankings can be represented with a set of item vectors in low-dimensional space:

- Upper Bound: For $n$ item vectors in $\mathbb{R}^d$, the number of representable rankings of size ( $K$ ) is at most $n^{\min(K, 2^d)}$. Increasing dimensionality enhances expressive power.

- Lower Bound: There exist $n$ item vectors such that the number of representable rankings in $\mathbb{R}^d$ is $n^{\Theta(d)}$, indicating expressivity increases with dimensionality.

Key Theoretical Insights

Dimensionality and Expressive Power

Higher-dimensional spaces allow exponentially more representable rankings, making the system capable of capturing more diversity and fairness. In contrast, low-dimensional spaces limit representability, potentially leading to insufficient diversity.

Popularity Bias

In low-dimensional spaces, rankings may become biased towards popular items, compressing the range of rankings and amplifying bias.

Structural Analysis of Representable Rankings

The investigation utilizes hyperplane arrangements and polyhedral representations (e.g., polyhedral cones) to characterize the structure of representable rankings and estimate bounds.

Failures in Low-Dimensional Spaces

Low-dimensional spaces may lead to overfitting to popular items and fail to represent diverse rankings, highlighting the need for higher-dimensional models or alternative approaches.

Outline of the Proof

Dot-Product Representation of Rankings

A ranking is derived from the inner products between item vectors ( $v_i \in \mathbb{R}^d$ ) and a query vector ( $q \in \mathbb{R}^d$ ). Items are ranked based on ( $\langle q, v_i \rangle$ ), and the number of rankings depends on how many ways ( $\langle q, v_i \rangle$ ) can be ordered, that is, and the number of distinct rankings corresponds to the number of unique permutations of these inner products. Each ranking represents a specific permutation, and the query vector ( $q$ ) determines which permutation applies.

Geometric Interpretation via Hyperplanes

The query space ( $q \in \mathbb{R}^d$ ) is divided into regions by hyperplanes, where each region corresponds to a specific ranking. A hyperplane is defined by $\langle q, v_i \rangle = \langle q, v_j \rangle$, representing the set of query vectors where items ( $i$ ) and ( $j$ ) are equally ranked. Each hyperplane divides the query space into two half-spaces: one where ( $\langle q, v_i \rangle > \langle q, v_j \rangle$ ) and another where ( $\langle q, v_i \rangle < \langle q, v_j \rangle$ ). The arrangement of these hyperplanes partitions the query space into ranking regions.

Upper Bound Using Hyperplane Arrangements

The maximum number of ranking regions corresponds to the number of regions formed by the hyperplane arrangement. Key geometric result can be expressed as follows. The number of regions formed by $n$ hyperplanes in $\mathbb{R}^d$ provides an upper bound on the number of distinct rankings with ( $O(n^{d})$ ). For ranking $K$ items, the number of regions scales as ( $n^{\min(K, 2d)}$. Since there are ( $\binom{n}{2}$ ) pairs of items, there are at most $\binom{n}{2}$ hyperplanes. Thus, the number of ranking regions scales as O(($\binom{n}{2}$){d}), which simplifies to $O(n^{2d})$ ). For top- $K$ rankings (i.e., considering only the top ( $K$ ) items), the number of regions is bounded by $O(n^{\min(K, 2d)})$ .

Lower Bound

A lower bound is demonstrated by constructing specific item vectors ( $v_i$ ) explicitly to maximize the number of distinct rankings: Arrange the vectors ( $v_1, v_2, \dots, v_n$ ) such that every pair ( ($v_i, v_j$) ) is linearly independent. This ensures that every possible ordering of $\langle q, v_i \rangle$ can be achieved by varying $q$ in $\mathbb{R}^d$. So, The number of representable rankings grows as $n^{\Theta(d)}$, demonstrating that the upper bound is tight under optimal vector arrangements.

Example Scenario

Consider three items in a 2D space ( $\mathbb{R}^2$ ):

- Item 1: $v_1 = (1, 0)$

- Item 2: $v_2 = (0, 1)$

- Item 3: $v_3 = (1, 1)$

A query vector ( q = (q_1, q_2) ) ranks the items based on the dot products:

- $\langle q, v_1 \rangle = q_1 $

- $ \langle q, v_2 \rangle = q_2 $

- $ \langle q, v_3 \rangle = q_1 + q_2 $

The ranking depends on the values of $q_1$ and $q_2$.

Geometric Interpretation Using Hyperplanes

Each pair of items defines a hyperplane:

- $v_1$ and $v_2$ : $q_1 = q_2$ (diagonal line)

- $v_1$ and $v_3$: $q_2 = 0$ (horizontal axis)

- $v_2$ and $v_3$: $q_1 = 0$ (vertical axis)

These hyperplanes divide the query space into regions, where each region corresponds to a different ranking of the items.

Regions and Rankings

The query space ( $q \in \mathbb{R}^2$ ) is divided into four regions by the three hyperplanes, each corresponding to a different ranking. For example:

- Region 1: $q_1 > q_2 > 0$ results in the ranking Item 1 > Item 3 > Item 2.

This example demonstrates how the number of hyperplanes and the dimensionality of the query space influence the number of distinct rankings. In other words, in higher dimensions, the number of hyperplanes increases combinatorially, leading to exponential growth in the number of ranking regions.

4. Experiment

Baseline Model:

- Implicit Alternating Least Squares (iALS), a widely adopted matrix factorization algorithm for recommendation systems had been used. iALS is known for its practical utility and implementation in distributed frameworks such as Apache Spark.

- Low-Dimensional Models: These involve a smaller latent space where the embeddings for users and items have fewer dimensions, resulting in faster computation and lower memory requirements.

- High-Dimensional Models: These involve a larger latent space, capturing more complex interactions between users and items. However, they are computationally expensive, causing iALS to slow down.

To address the computational challenges of high-dimensional models, a block coordinate descent solver is used as an alternative optimization method.

Datasets

Experiments are conducted on three real-world datasets from different domains:

- MovieLens 20M (ML-20M):

- A movie recommendation dataset.

- Explicit ratings are binarized by keeping only user-item pairs with ratings greater than 4.

- Million Song Dataset (MSD):

- A dataset for music recommendation.

- All user-item interactions are considered.

- Epinions:

- A dataset based on user reviews and ratings.

- User-item pairs with ratings greater than 4 are retained, and only users and items with more than 20 interactions are included.

Preprocessing and Evaluation

- Implicit Feedback Conversion: Explicit ratings are transformed into implicit feedback by binarizing the data based on predefined thresholds.

- Filtering Criteria: For Epinions, users and items with fewer than 20 interactions are excluded to ensure meaningful data.

- Evaluation Protocol: The evaluation strictly follows the strong generalization setting proposed by Liang et al., ensuring that the performance is assessed on unseen user-item pairs.

First, experiments were conducted to demonstrate the reasoning behind the theoretical analysis.

Personalization and Popularity Bias Results

Personalization is a critical goal of recommender systems, and this experiment contrasts it with popularity bias (the system’s tendency to recommend popular items).

- Metrics Measured:

- Recall@K (ranking quality)

- ARP@K (Average Recommendation Popularity, measuring bias)

- Results:

- Low-dimensional models:

- High popularity bias, recommending mainly popular items, leading to anti-personalization.

- High-dimensional models:

- ML-20M dataset: Ranking quality plateaued at low dimensionality ($d$ = 1,024). Severe popularity bias due to long-tail items. A dimensionality of d = 512 balanced ranking quality and bias reduction.

- MSD and Epinions: Increasing dimensionality continually improved ranking quality. High-dimensional models significantly enhanced personalization and reduced popularity bias.

- Low-dimensional models:

Diversity and Item Fairness

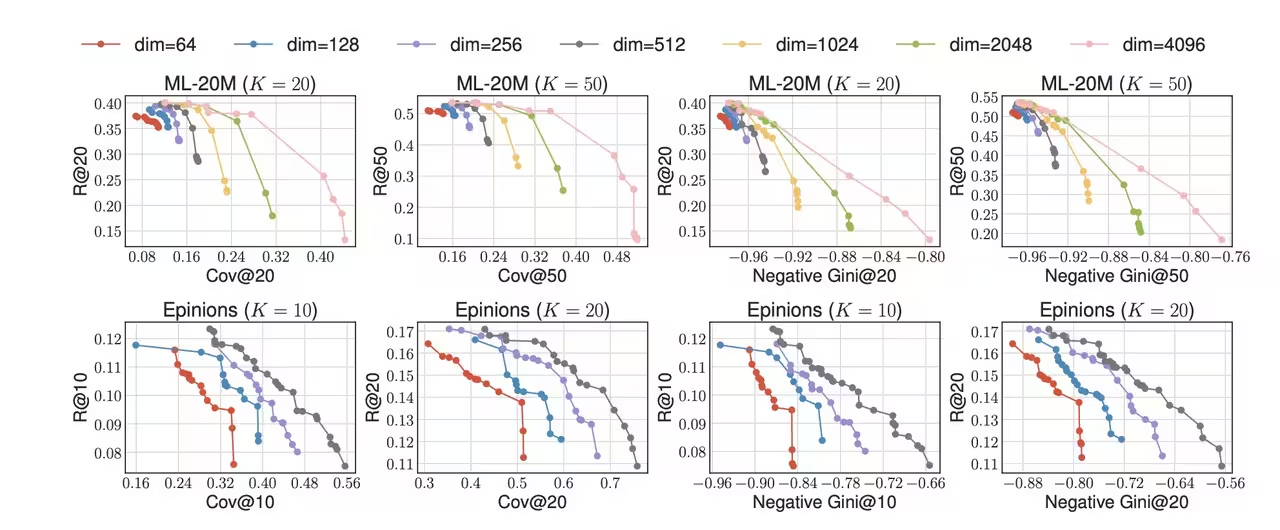

Following figure points out the Diversity and Item Fairness. So, illustrates how the dimensionality of the iALS model impacts catalog coverage and item fairness on the ML-20M dataset. The figure is divided into two rows:

- Top Row: Pareto frontier of Recall@K (R@K) vs. Coverage@K (Cov@K).

- Bottom Row: Pareto frontier of Recall@K (R@K) vs. Negative Gini@K.

Each curve represents a Pareto frontier corresponding to a specific dimensionality setting of the iALS model, optimized under various hyperparameter configurations. These curves highlight trade-offs between ranking quality (R@K) and the secondary metrics (Cov@K and Negative Gini@K).

Recall@K (R@K): A standard metric to evaluate ranking quality, indicating how well the model retrieves relevant items in the top-K recommendations.

Coverage@K (Cov@K): The proportion of items in the catalog that appear at least once in the top-K recommendations across all users. Higher Cov@K indicates better catalog diversity.

Negative Gini@K: A fairness measure that evaluates how evenly items are recommended in the top-K lists. Higher values of Negative Gini@K suggest more fair distribution.

In other words, models with similar ranking quality can have vastly different catalog coverage or fairness. Developers must avoid focusing solely on ranking quality as it could lead to non-diverse, unfair recommendations.

Key Observations

- Impact of Dimensionality:

- Models with higher dimensionality perform better on both catalog coverage (Cov@K) and item fairness (Negative Gini@K).

- Low-dimensional models exhibit limited diversity and fairness, regardless of hyperparameter tuning.

- Pareto Frontier and Trade-offs:

- The Pareto frontier curves show trade-offs between ranking quality (R@K) and secondary objectives like Cov@K and Negative Gini@K.

- Some models achieve similar R@K values but differ substantially in terms of diversity and fairness, demonstrating the importance of multidimensional evaluation.

- Potential Pitfall in Model Selection:

- Developers often prioritize ranking quality (R@K) during model selection, which can favor low-dimensional models due to their computational efficiency and smaller space requirements.

- However, such models can result in extremely nondiverse and unfair recommendations, overlooking critical aspects of fairness and catalog representation.

- Versatility Limitations:

- Even when considering both ranking quality and diversity/fairness, the range of dimensionality tested is often restricted due to computational resource constraints. This limits the versatility of the models.

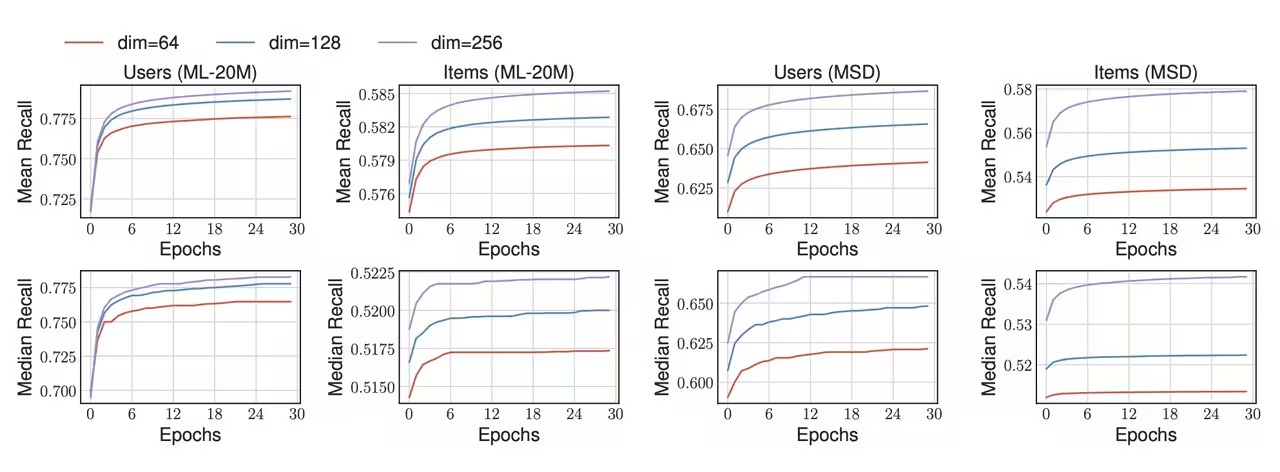

Self-Biased Feedback Loop

To capture the dynamic nature of user interests, a system typically retrains a model after observing data over a specified time interval. However, hyperparameters are often fixed during model retraining, as tuning them can be costly, especially when models are frequently updated. Thus, the robustness of deployed models (including hyperparameters) to dynamic changes in user behavior is critical. This is related to unbiased recommendation and batch learning from logged bandit feedback.

Since user feedback is collected under the currently deployed system, item popularity is influenced partly by the system itself. Consequently, when a system narrows down its effective item catalog, future data tend to concentrate on items that are frequently recommended. This phenomenon accelerates popularity bias in the data and further increases the number of cold-start items.

To observe the effect of dimensionality on data collection within a training and observation loop, they repeatedly trained and evaluated an iALS model with varying dimensionalities on ML-20M and MSD. Following a weak generalization setting, they first observed 50% of the feedback for each user in the original dataset. Then, they trained a model using all samples in the observed dataset and predicted the top-50 rankings for all users, removing the observed user-item pairs from the rankings. Subsequently, they observed the positive pairs in the predicted rankings as additional data for the next model training. During evaluation, they computed the proportion of observed samples for each user, termed “recall” for users, and also calculated this recall measure for items to determine how well the system can collect data for each item.

Key Observations

- Dimensionality Impact on Data Collection:

- High-dimensional models (e.g., ( $d = 128, 256 $)) collect data more efficiently for both users and items compared to low-dimensional models (e.g., ( $d = 64 $)).

- The performance gap is particularly pronounced for items (second and fourth columns), highlighting the limitations of low-dimensional models in item coverage.

- Dataset Size and Catalog Impact:

- The efficiency gap between low- and high-dimensional models is more significant in MSD (bottom row) compared to ML-20M (top row).

- This is attributed to MSD’s larger item catalog, which requires higher dimensionality to ensure sufficient item representation and recall.

- Recall Patterns in MSD:

- In the fourth column (bottom row), models with ($d = 64 $) demonstrate limited item recall, focusing on a small subset of items.

- Models with higher dimensions (e.g., ( $d = 128, 256 $ )) exhibit better performance by achieving higher recall across a broader range of items.

- Epoch-Specific Trends:

- In ML-20M, the performance gap between ( $d = 64 $) and higher-dimensional models (e.g., ( d = 128, 256 )) is small in the first epoch, but it grows significantly in subsequent epochs.

- For MSD, the efficiency gain with higher ( $d $) values is more evident across all epochs, emphasizing the advantages of high-dimensional models in datasets with large catalogs.

- Notable Results with ( $d = 64 $):

- In MSD, models with ( $d = 64 $) achieve reasonable user recall (third column, bottom row) but fail to provide adequate item recall (fourth column, bottom row). This underscores the trade-off between dimensionality and data coverage.

- Training and Observation Loops:

- The results highlight the evolving gap between low- and high-dimensional models during training.

- While academic research often evaluates models in a single epoch, the efficiency of data collection over multiple epochs significantly benefits from higher-dimensional embeddings.

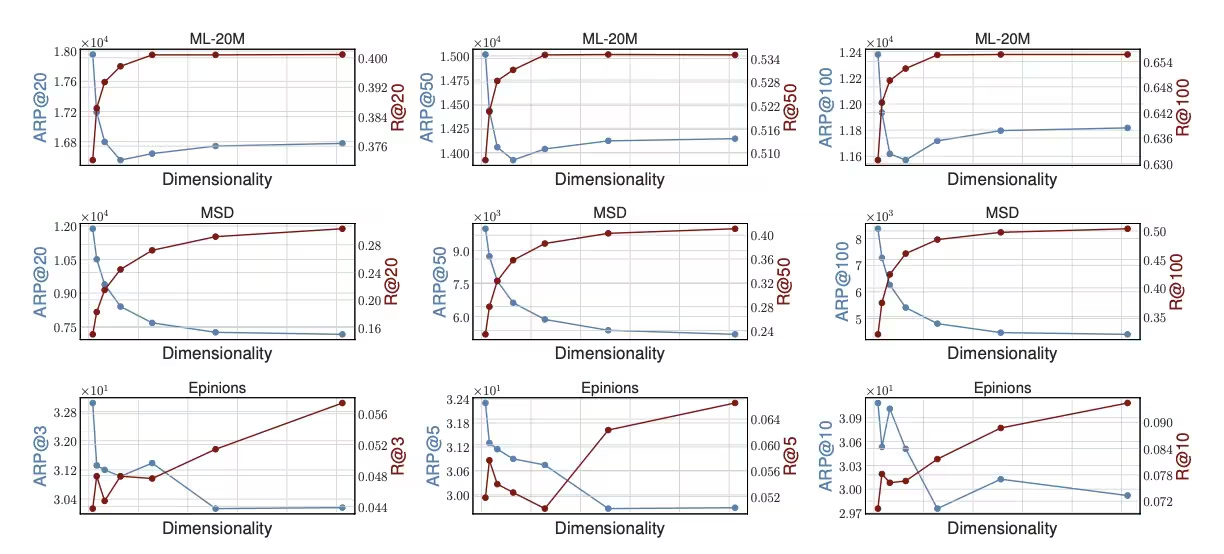

Personalization and Popularity Bias

The figure above illustrates illustrates the trade-off between personalization (measured as Recall@K) and popularity bias (measured as ARP@K) for iALS models across different embedding sizes ( $d$ ). In other words, it highlights the limitations of low-dimensional embeddings in balancing personalization and popularity bias. Higher-dimensional models are more effective at mitigating this bias, enabling recommendations that better align with individual user preferences.

Key Observations

- Low-Dimensional Models:

- Models with small embedding sizes (e.g., ( $d = 64, 128 $)) exhibit high ARP@K values (blue lines), indicating a strong bias toward recommending popular items.

- This bias is especially pronounced for the top-ranked items (leftmost figures in each row), reflecting a lack of personalization.

- High-Dimensional Models:

- As ( $d$ ) increases (e.g., ( $d = 512, 4096 $)), the ARP@K values decrease, meaning the model shifts from recommending overly popular items to more personalized and diverse recommendations.

- These models achieve a better balance between Recall@K and ARP@K.

- Dataset-Specific Trends:

- The tuning range for ( $d$ ) varies across datasets to maintain the low-rank condition of matrix factorization:

- ML-20M and MSD: ( d $\in$ {64, 128, …, 4096} ).

- Epinions: ( d $\in$ {8, 16, …, 512} ).

- Datasets with larger item catalogs (e.g., MSD) benefit more from larger embeddings.

- The tuning range for ( $d$ ) varies across datasets to maintain the low-rank condition of matrix factorization:

- Anti-Personalization in Low-Dimensional Models:

- The popularity bias is severe for smaller ( $d$ ), where recommendations prioritize frequently occurring items in the training data, reducing personalization.

5. Conclusion

This paper offers a valuable blend of theoretical analysis and practical relevance, providing a deep understanding of the limitations of dot-product models in recommendation systems and presenting actionable insights for their improvement.

The core conclusion drawn from the empirical evidence is the importance of sufficient dimensionality in user/item embeddings to enhance the quality of recommender systems. Low-dimensional embeddings tend to amplify a popularity bias, widening the ranking gap between popular items and long-tail (less popular or niche) items. This effect is particularly notable because lower dimensionality limits the system’s ability to represent diverse rankings, leading to a preference for more popular items, often at the expense of long-tail ones.

From a theoretical perspective, the power of dot-product models is constrained by the dimensionality of item factors and the inherent bias introduced by low dimensionality. Dot-product models with low-dimensional item factors are restricted by the rank of the interaction matrix and the number of representable rankings, which are governed by hyperplane arrangements in the latent space. As the dimensionality increases, the model can capture a wider range of interaction patterns, though this comes with the trade-off of increased complexity and the potential for overfitting.

Applications and Open Problems

The results have practical implications for improving recommendation systems using higher-dimensional spaces or alternative methods. Future research could refine the bounds on representable rankings and explore methods for handling long-tail items in ranking models.

Key Messages:

-

Dimensionality Matters: The dimensionality of item and query embeddings is critical to a ranking model’s expressiveness. Higher-dimensional spaces enable more complex and varied rankings, whereas low-dimensional spaces limit the diversity of rankings, often leading to reduced catalog coverage and the reinforcement of popularity bias.

-

Popularity Bias in Low Dimensions: Low-dimensional spaces inherently favor popular items in the rankings. The study formalizes this through geometric interpretations, illustrating how a small set of popular items can dominate the rankings, crowding out long-tail items and causing the system to be inherently biased toward already popular items.

-

Hyperplane Arrangements and Facets: Rankings are shaped by the partitioning of the query space via hyperplanes. As dimensionality increases, so does the number of regions (and, thus, rankings) within the space. The number of facets on the polytope formed by item vectors directly impacts how diverse the rankings can be.

-

Upper and Lower Bounds on Ranking Representation: The study establishes upper and lower bounds on the number of representable rankings. In low-dimensional spaces, the expressiveness of the model is severely constrained, while higher-dimensional spaces allow for the representation of many more distinct rankings.

Review and Opinion:

Many real-world recommender systems opt for low-dimensional embeddings to reduce computational costs, but this often comes at the expense of diversity and fairness in recommendations. The paper provides a clear geometric intuition for why low-dimensional spaces fail to capture complex ranking relationships, offering a compelling direction for improvement. The findings suggest that increasing dimensionality or adopting alternative models, such as graph-based methods, could help alleviate some of these issues.

The exploration of popularity bias through hyperplane arrangements is particularly insightful, as it links a common challenge in recommender systems to a formal mathematical framework. This approach could pave the way for new techniques that seek to balance the representation of popular and long-tail items in the rankings.

This study places a strong emphasis on understanding the expressive power of dot-product models, particularly when the dimensionality of the latent space is limited. The use of geometric and combinatorial methods (e.g., hyperplane arrangements, convex polyhedra) provides a robust theoretical foundation for the empirical observations made in the paper. Additionally, the research highlights how dimensionality directly affects the diversity and fairness of recommendations, offering clear insights into why certain systems may face limitations in this regard.

Note: In this review, I chose not to delve into the mathematical proofs and derivations, instead focusing on the practical implications and the overall contribution of the theorems to the experiments and the core ideas behind the paper.

Author Information

- Author Name: Zeynep ALTINER

- Major: Industrial and Systems Engineering

- Research Topic: Multi-modality, multi-modal embedding